Project area/s

- Pulsars and Fast Transients

Project Details

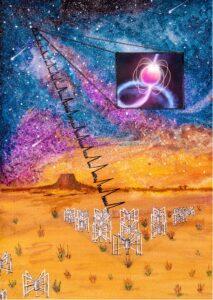

An artist’s impression of the Murchison Widefield Array detecting a pulsar signal. Processing the data collected for SMART to find such pulsars is like finding a needle in a haystack. Having a robust database linked to our processing workflows is critical for this to become a reality, and that is where this project comes in! Credit: Dilpreet Kaur (CSIRO).

The Southern-Sky MWA Rapid Two-Metre (SMART) pulsar survey is an ambitious program that is moving into its next stage of processing, with five discoveries so far and many more around the corner. Given the large data volume and the number of linked processing tasks, the Nextflow-based SMART pipeline must manage and store a vast amount of metadata, including the status of each stage of analysis at any given moment.
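To make the idea of per-stage status information more concrete, here is a minimal Python sketch of how the status of a single pointing might be tracked as it moves through the pipeline. The stage names, the `StageState` values and the `PointingStatus` class are all hypothetical, chosen purely for illustration; they are not taken from the actual SMART pipeline or database schema.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional


class StageState(Enum):
    """Hypothetical lifecycle states for one processing stage."""
    PENDING = "pending"
    RUNNING = "running"
    COMPLETED = "completed"
    FAILED = "failed"


# Hypothetical stage names (illustration only; not the real SMART stages).
STAGES = ("beamform", "dedisperse", "search", "fold")


def _all_pending() -> Dict[str, StageState]:
    """Start every stage in the PENDING state."""
    return {stage: StageState.PENDING for stage in STAGES}


@dataclass
class PointingStatus:
    """Tracks the state of every stage for one (hypothetical) pointing."""
    pointing_id: str
    states: Dict[str, StageState] = field(default_factory=_all_pending)

    def set_state(self, stage: str, state: StageState) -> None:
        if stage not in self.states:
            raise KeyError(f"unknown stage: {stage!r}")
        self.states[stage] = state

    def next_stage(self) -> Optional[str]:
        """Return the first stage that has not completed, or None if all have."""
        for stage in STAGES:
            if self.states[stage] is not StageState.COMPLETED:
                return stage
        return None


status = PointingStatus("pointing_001")
status.set_state("beamform", StageState.COMPLETED)
print(status.next_stage())  # dedisperse
```

Note that a stage marked `FAILED` is still reported by `next_stage()`, since it has not completed; a real design would likely need a retry or error-handling policy on top of this.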

We have developed a database schema (Postgres) and basic APIs that can, in principle, handle this kind of information; the database is hosted online at Data Central. The database is ready for use, and the workflows are nearly ready to be deployed at scale on high-performance computing systems. The missing link is the software that lets the database and the workflows interact!

In this project, the student will develop and integrate a set of software tools that gather data from, and send data to, the database as the automated SMART workflow crunches through more than three petabytes of pulsar search data. This will greatly streamline the processing workflow, and thus accelerate the rate of pulsar discoveries and the science that can be extracted from them.
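The two directions of traffic described above (gathering data from the database and sending updates to it) could look roughly like the Python sketch below. It uses the standard-library `sqlite3` module as a stand-in for the Postgres database, in the spirit of a mock database; the `processing_task` table, its columns and the function names are hypothetical and do not reflect the real schema hosted at Data Central.

```python
import sqlite3

# Hypothetical mock schema; the real SMART schema will differ.
SCHEMA = """
CREATE TABLE IF NOT EXISTS processing_task (
    task_id     INTEGER PRIMARY KEY,
    pointing_id TEXT NOT NULL,
    stage       TEXT NOT NULL,
    status      TEXT NOT NULL DEFAULT 'pending',
    UNIQUE (pointing_id, stage)
);
"""


def connect(path=":memory:"):
    """Open a mock database (SQLite standing in for Postgres) and create the schema."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def send_status(conn, pointing_id, stage, status):
    """'Send' direction: insert a stage's status, or update it if the row exists."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.execute(
            """
            INSERT INTO processing_task (pointing_id, stage, status)
            VALUES (?, ?, ?)
            ON CONFLICT (pointing_id, stage) DO UPDATE SET status = excluded.status
            """,
            (pointing_id, stage, status),
        )


def fetch_pending(conn, stage):
    """'Gather' direction: list pointings whose given stage is still pending."""
    rows = conn.execute(
        "SELECT pointing_id FROM processing_task "
        "WHERE stage = ? AND status = 'pending' ORDER BY pointing_id",
        (stage,),
    )
    return [row[0] for row in rows]


conn = connect()
send_status(conn, "pointing_A", "search", "pending")
send_status(conn, "pointing_B", "search", "pending")
send_status(conn, "pointing_A", "search", "completed")
print(fetch_pending(conn, "search"))  # ['pointing_B']
```

The `INSERT ... ON CONFLICT ... DO UPDATE` (upsert) form is supported by both PostgreSQL and SQLite 3.24 or later. Against the real Postgres database the same DB-API pattern would apply through a driver such as psycopg2, the most visible difference being its `%s` placeholders instead of SQLite's `?`.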

Student Attributes

Academic Background

Enrolment in any Computing or Data Science course is appropriate. The applicant should have an interest in astrophysics, but does not need to be enrolled in the astronomy stream.

Computing Skills

Relational databases (esp. Postgres), Unix operating systems, Python (esp. Django), Bash

Training Requirement

Nextflow, using supercomputing systems

Project Timeline

| Week | Activity |
| --- | --- |
| Week 1 | Inductions and project introduction |
| Week 2 | Initial presentation |
| Week 3 | Familiarisation with the current database structure; creating a mock database |
| Week 4 | Generating “fake” data and identifying the required interactions with the mock database |
| Week 5 | Writing and testing Python functions to interact with the test database |
| Week 6 | Testing and updating Python functions to interact with the deployed database |
| Week 7 | Integrating scripts into SMART workflows |
| Week 8 | Integrating scripts into SMART workflows (and identifying remaining gaps) |
| Week 9 | Final presentation |
| Week 10 | Final report |
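For the integration weeks, one common way to let a Nextflow process talk to a database is to wrap the Python functions in a small command-line script that the process's shell `script:` block can call. The sketch below assumes that approach; the script's flags, the valid status values and the mock SQLite table are all hypothetical illustrations, not part of the existing SMART code.

```python
import argparse
import sqlite3

# Hypothetical mock table (SQLite standing in for the real Postgres database).
SCHEMA = """
CREATE TABLE IF NOT EXISTS processing_task (
    pointing_id TEXT NOT NULL,
    stage       TEXT NOT NULL,
    status      TEXT NOT NULL,
    PRIMARY KEY (pointing_id, stage)
);
"""

# Hypothetical set of allowed status values.
VALID_STATUSES = ("pending", "running", "completed", "failed")


def build_parser():
    """Define the (hypothetical) command-line interface."""
    parser = argparse.ArgumentParser(
        description="Record the status of a processing stage (mock)."
    )
    parser.add_argument("--db", default="smart_mock.db", help="path to the mock SQLite file")
    parser.add_argument("--pointing", required=True, help="pointing identifier")
    parser.add_argument("--stage", required=True, help="processing stage name")
    parser.add_argument("--status", required=True, choices=VALID_STATUSES)
    return parser


def apply(conn, args):
    """Write one status update; separate from main() so it can be tested in memory."""
    conn.executescript(SCHEMA)  # ensure the mock table exists
    with conn:  # commit on success, roll back on error
        conn.execute(
            "INSERT INTO processing_task (pointing_id, stage, status) VALUES (?, ?, ?) "
            "ON CONFLICT (pointing_id, stage) DO UPDATE SET status = excluded.status",
            (args.pointing, args.stage, args.status),
        )


def main(argv=None):
    """Parse arguments, open the database file, apply the update and close it."""
    args = build_parser().parse_args(argv)
    conn = sqlite3.connect(args.db)
    try:
        apply(conn, args)
    finally:
        conn.close()
    return 0
```

Saved as a script (hypothetically `smart_status.py`) with the usual `if __name__ == "__main__": main()` entry point, a workflow step could then run a command such as `python smart_status.py --pointing pointing_001 --stage search --status completed`. Keeping `apply()` separate from `main()` means the database logic can be exercised against an in-memory database without touching the filesystem.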